#003👩‍🔬 The Near Future: Spatial Computing, Animism and the Artists' Dreams

An open love letter to Liquid City ✨

An Infinite Space for Imaginations

Before I began my exploration of a ‘grid-free’ life—dirtbagging in the desert, road-tripping with a backpack to visit off-grid communities, diving into DAOs and new cities—I had a career in XR.

XR stands for Extended Reality: the umbrella term for augmented, virtual, and mixed reality technologies.

I fell in love with building virtual worlds. It was an infinite space where imagination could run without limits. In college, I would spend hours squatting on my desk chair, building mini games in Unity and 3D modeling in Blender, having so much fun that I lost track of time.

The Philosophy of Animated Reality

I started an LLC called Animated Reality. The name is a double entendre: first, by extending reality, we are also making reality animated; second, reality has always been animated.

The core philosophy is that XR technology revives the feeling of animism for the modern world.

The truth is, ancient cultures lived with a belief in an enchanted world. We gave names and meaning to rivers, saw gods in the wind and mountains, and treated the world as alive. As modern humans, we simply forgot this lens.

XR animates reality, as if magic were real. Newtonian physics doesn’t have to apply in virtual space. Pokémon roam the streets; mundane objects jump around and talk to you. Your apartment can bloom into a garden with a gesture. Reality becomes enchanted.

After my long soul-searching break, I started making XR things again, this time with a refreshed perspective. Thanks to an artist grant from Monaverse, I was able to create a mini game, ‘Everything is Alive’, to be released under Animated Reality. It’s a beat-making game where you turn plants and animals into fun instruments and sequence sounds with them.

My Disenchantment with XR

For a while, game development was my first love. I was obsessed. But at some point—maybe because I’m not 19 anymore—I began feeling the toll of screen work. My vision got worse. My body ached after long hours at the screen.

To pay the bills, I sometimes had to work on XR experiences for luxury brands and social influencers, which made me feel quite empty inside. I kept thinking…

I really only have one life. Why spend it supporting a version of the world I don’t even want to live in?

A world of virtual try-ons and overpriced handbags. Of metaverse billboards and brand activations. Of glossy surfaces with no soul. I stopped building virtual worlds because I stopped wanting to be inside them. I wanted to be in the real world, look people in the eyes, and touch grass.

Over the years, I’ve learned that things aren’t all that black and white.

Tech is part of our evolution—it’s encoded in our genes. The question is: how do we design technology that supports our well-being? That brings us closer to life, not farther away? I realized that a Solarpunk mindset — a vision of technology in harmony with nature — can exist within tech companies and hardware labs, not just outside of them.

MUD/WTR helps people drink less coffee; LMNT provides sugar-free hydration; solar panels reduce our dependence on fossil fuels; Pokémon Go got millions of people off the couch and into the streets; Daylight Computer aims to bring the workspace closer to nature.

Technology might be the cause of our disconnection. But it’s also one of the only ways we might save ourselves.

Liquid Glass and an Augmented World

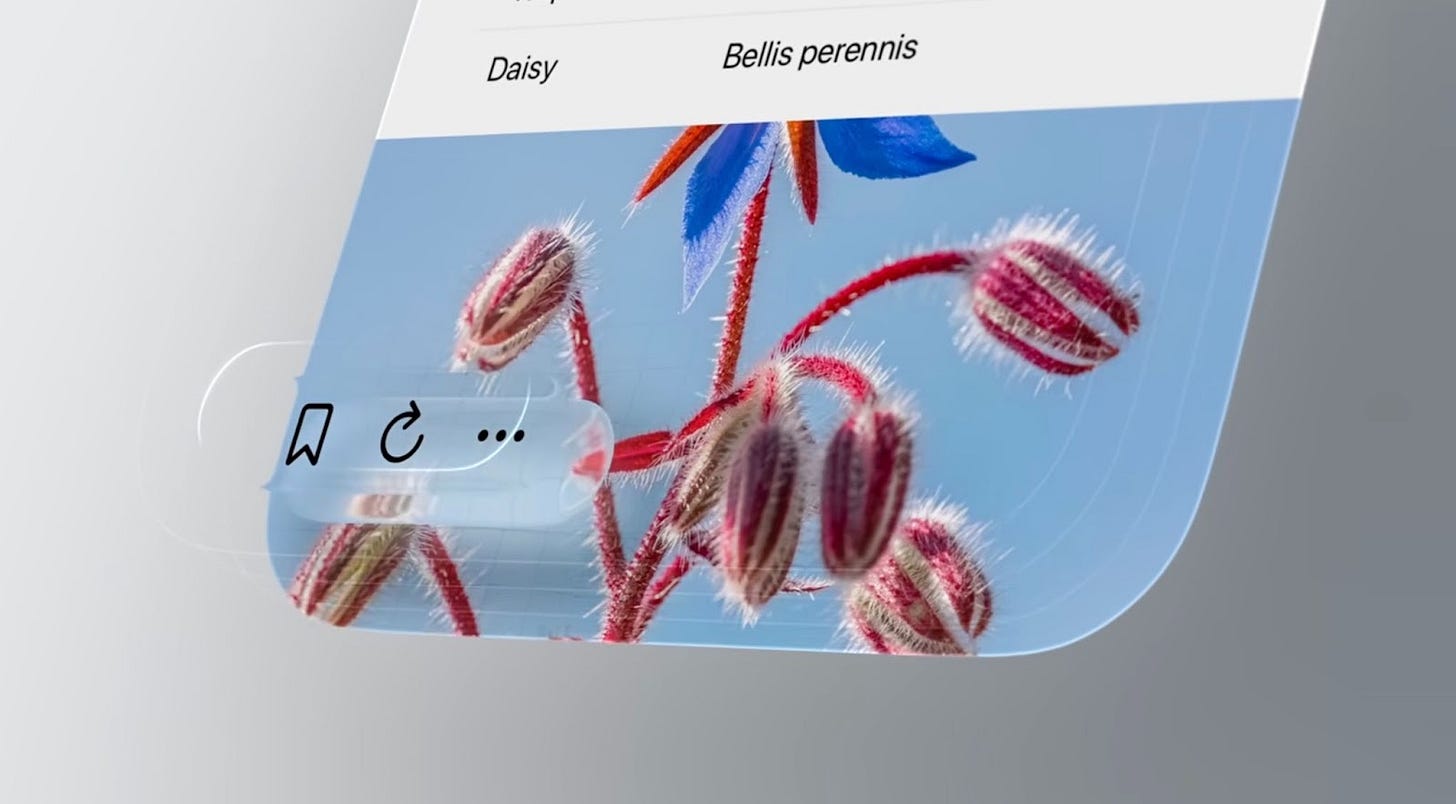

Apple’s new Liquid Glass design direction, announced at WWDC this month, is sleek, elegant, and minimal, while also emphasizing depth and dimensionality. It marks a shift: a subtle heralding of the spatial computing age.

When Apple introduced iOS 7 in 2013, people complained about the loss of skeuomorphic shading. But can you imagine going back now?

Apple plays the long game. These design directions are chess moves that pave the way for its future products.

We’re heading toward a world of BCI, spatial interfaces, and AI agents. We won’t need to interact with screens. Our tools will be embodied, intelligent, and all around us. The era of spatial computing is arriving, whether we like it or not.

Just as ChatGPT and Midjourney hit an inflection point in late 2022 after decades of ML research, XR too will have its moment. It’s still niche (and dorky) now, but not forever.

A World Without Screens

One of the most influential XR films to date is HYPER-REALITY (2016) by Keiichi Matsuda—a chaotic vision of a future saturated with digital overlays, branding, gamification, and virtual agency.

In 2019, Keiichi released an essay, The Liquid City, describing a future where physical reality and imagination ‘melt’ into one another, and technology becomes an extension of our senses.

The Liquid City will respond to the movement of your body, even the signals coming from your brain. This embodied interaction changes our relationship to technology; rather than devices that you control with a keyboard or touchscreen, technology is framed more as an extension of one’s own abilities. It can be seen as a new sense or a set of supernatural powers that extend your awareness and agency in the physical world around you.

Keiichi Matsuda founded Liquid City as ‘a design and prototyping studio, with a focus on shaping a positive future for technology in society.’ Most recently, Liquid City released Agents, a short film made in collaboration with Niantic Labs. It imagines a future where AI agents, powered by spatial computing, live among us in our everyday world and help us improve our lives in various ways.

Seriously, this video is so adorable and deeply insightful!

Working with Liquid City

In late 2024, I got an email from Liquid City asking if I was available to work as an XR developer. I couldn’t believe it; I had been quietly admiring their work for years!

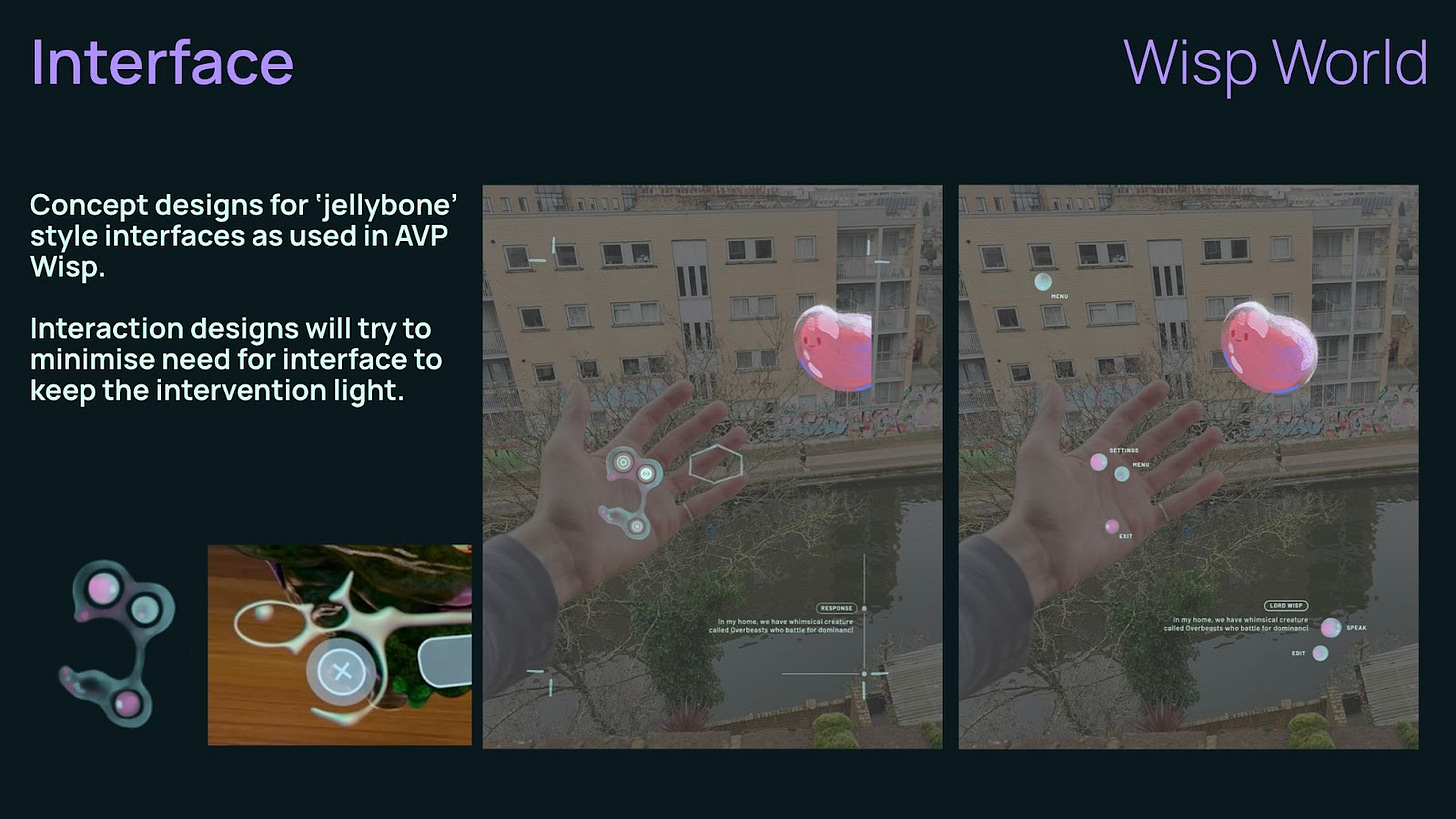

We started collaborating on a new version of Wisp World built for Snapchat Spectacles, a continuation of the Wisp World experience on Apple Vision Pro.

It wasn’t easy at the beginning. I hadn’t built a full XR project in a while, and Snap had just transitioned Lens Studio to TypeScript. I barely knew it, but I was confident I could figure it out. AI couldn’t help much because the ecosystem was too new. So I learned by doing.

Working at the frontier is challenging for many reasons. There isn’t much of a formula to follow. You have to get really scrappy.

Unironically, I probably spent 15+ hours in total just waiting for the AR glasses to restart.

‘It felt like an old vending machine where you had to bang on it to get the thing to come out,’ said Lachlan, the (seriously, very brilliant) creative technologist at Liquid City.

And yet—we made something beautiful.

In Wisp World, players interact with floating alien creatures called Wisps, who have been separated by a storm. Your job is to reunite them by talking to each Wisp, learning clues, and seeking out the others in your physical space. Here’s a snippet of the Wisp World gameplay.

Before I joined, the concept art, storyline, and conversation engine had already been developed by the Liquid City team.

It reminded me that it’s possible to build insightful, helpful, and soulful stories with XR, ones that stir wonder and curiosity rather than feed fame or distraction.

This game takes your curiosity on an adventure, leading you to explore your home and the outdoors, touch flowers, and scan trees, while talking with these ‘Wisps’ who make quirky comments on the things you see.

It also made me reflect on the half-finished games I’ve made over the years—most of them still sitting in forgotten GitHub repos. Only three (out of the… 30?) ever made it to the public.

We underestimate how much work it takes to finish something. By some estimates, almost 90% of indie games never get finished, and as much as 60% of the content created for AAA games may ultimately be cut or never released.

Shipping is hard. Shipping soulful work is even harder. Liquid City managed to do both.

What’s Next?

Here’s a fun prompt I’ve been pondering:

What happens when Brain-Computer Interfaces (BCI) become common?

What if anything you think of could instantly become real? How would we live?

How does privacy work when companies get read and write access to our brains?

Would we evolve into a telepathic society, open to and accepting of all the dirty little secrets currently stored in the deepest parts of our private minds? Or would we become even more enslaved by algorithms?

How would the attention economy work?

Maybe we’ll all need a mandatory mindfulness practice? (Fun fact: the Indian government offers special leave for Vipassana retreats.)

These are some crumbs of thoughts and dreams I’m pondering… If you have ideas and recommendations, please share them!

Thank you for reading Grid Free Minds :) This blog is my labor of love ❤️
